Cyber Signals Issue 6

Every day more than 2.5 billion cloud-based, AI-driven detections protect Microsoft customers.

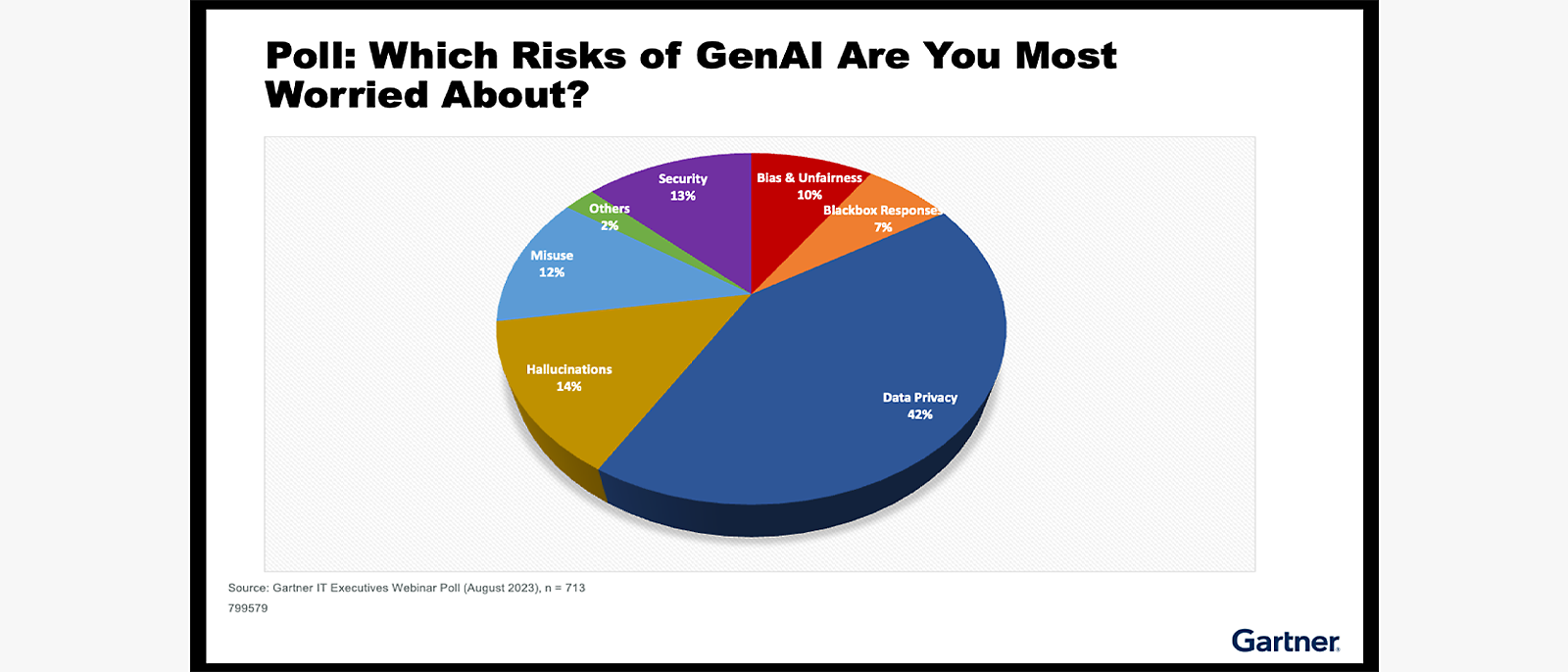

Today the world of cybersecurity is undergoing a massive transformation. Artificial intelligence (AI) is at the forefront of this change, posing both a threat and an opportunity. While AI has the potential to empower organizations to defeat cyberattacks at machine speed and drive innovation and efficiency in threat detection, hunting, and incident response, adversaries can use AI as part of their exploits. It’s never been more critical for us to design, deploy, and use AI securely.

At Microsoft, we are exploring the potential of AI to enhance our security measures, unlock new and advanced protections, and build better software. With AI, we have the power to adapt alongside evolving threats, detect anomalies instantly, respond swiftly to neutralize risks, and tailor defenses for an organization’s needs.

AI can also help us overcome another of the industry’s biggest challenges. In the face of a global cybersecurity workforce shortage, with roughly 4 million cybersecurity professionals needed worldwide, AI has the potential to be a pivotal tool to close the talent gap and to help defenders be more productive.

We’ve already seen in one study how Copilot for Security can help security analysts regardless of their expertise level—across all tasks, participants were 44 percent more accurate and 26 percent faster.

As we look to secure the future, we must ensure that we balance preparing securely for AI and leveraging its benefits, because AI has the power to elevate human potential and solve some of our most serious challenges.

A more secure future with AI will require fundamental advances in software engineering. It will require us to understand and counter AI-driven threats as essential components of any security strategy. And we must work together to build deep collaboration and partnerships across public and private sectors to combat bad actors.

As part of this effort and our own Secure Future Initiative, OpenAI and Microsoft are today publishing new intelligence detailing threat actors’ attempts to test and explore the usefulness of large language models (LLMs) in attack techniques.

We hope this information will be useful across industries as we all work towards a more secure future. Because in the end, we are all defenders.

Watch the Cyber Signals digital briefing, where Vasu Jakkal, Corporate Vice President of Microsoft Security Business, interviews key threat intelligence experts on cyberthreats in the age of AI, ways Microsoft is using AI for enhanced security, and what organizations can do to help strengthen defenses.

The cyberthreat landscape has become increasingly challenging with attackers growing more motivated, more sophisticated, and better resourced. Threat actors and defenders alike are looking to AI, including LLMs, to enhance their productivity and take advantage of accessible platforms that could suit their objectives and attack techniques.

Given the rapidly evolving threat landscape, today we are announcing Microsoft’s principles guiding our actions that mitigate the risk of threat actors, including advanced persistent threats (APTs), advanced persistent manipulators (APMs), and cybercriminal syndicates, using AI platforms and APIs. These principles include identification of and action against malicious threat actors’ use of AI, notification to other AI service providers, collaboration with other stakeholders, and transparency.

Although threat actors’ motives and sophistication vary, they share common tasks when deploying attacks. These include reconnaissance, such as researching potential victims’ industries, locations, and relationships; coding, including improving software scripts and malware development; and assistance with learning and using both human and machine languages.

In collaboration with OpenAI, we are sharing threat intelligence showing detected state-affiliated adversaries (tracked as Forest Blizzard, Emerald Sleet, Crimson Sandstorm, Charcoal Typhoon, and Salmon Typhoon) using LLMs to augment cyberoperations.

The objective of Microsoft’s research partnership with OpenAI is to ensure the safe and responsible use of AI technologies like ChatGPT, upholding the highest standards of ethical application to protect the community from potential misuse.

Emerald Sleet’s use of LLMs involved research into think tanks and experts on North Korea, as well as content generation likely to be used in spear phishing campaigns. Emerald Sleet also interacted with LLMs to understand publicly known vulnerabilities, troubleshoot technical issues, and for assistance with using various web technologies.

Salmon Typhoon, a China-backed group, has been assessing the effectiveness of using LLMs throughout 2023 to source information on potentially sensitive topics, high-profile individuals, regional geopolitics, US influence, and internal affairs. This tentative engagement with LLMs could reflect both a broadening of its intelligence-gathering toolkit and an experimental phase in assessing the capabilities of emerging technologies.

We have taken measures to disrupt assets and accounts associated with these threat actors and shape the guardrails and safety mechanisms around our models.

AI-powered fraud is another critical concern. Voice synthesis is an example of this, where a three-second voice sample can train a model to sound like anyone. Even something as innocuous as your voicemail greeting can be used to get a sufficient sampling.

Much of how we interact with each other and conduct business relies on identity proofing, such as recognizing a person’s voice, face, email address, or writing style.

It’s crucial that we understand how malicious actors use AI to undermine longstanding identity proofing systems so we can tackle complex fraud cases and other emerging social engineering threats that obscure identities.

AI can also be used to help companies disrupt fraud attempts. Although Microsoft discontinued our engagement with a company in Brazil, our AI systems detected its attempts to reconstitute itself to re-enter our ecosystem.

The group continually attempted to obfuscate its information, conceal ownership roots, and reenter, but our AI detections used nearly a dozen risk signals to flag the fraudulent company and associate it with previously recognized suspect behavior, thereby blocking its attempts.
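As a rough illustration of how several risk signals might be combined to flag a re-registering entity, here is a minimal sketch. The signal names, point values, and threshold are hypothetical and chosen only for the example; the article does not describe how Microsoft's detections are actually built.

```python
from dataclasses import dataclass, field

# Hypothetical risk signals and point values, for illustration only.
SIGNAL_POINTS = {
    "shared_payment_instrument": 30,
    "overlapping_ownership_records": 25,
    "matching_registered_address": 20,
    "reused_contact_email_domain": 15,
    "similar_company_name": 10,
}


@dataclass
class Application:
    """A new account application and the risk signals observed on it."""
    name: str
    signals: set[str] = field(default_factory=set)


def risk_score(app: Application) -> int:
    """Add up the points of every recognized signal on the application."""
    return sum(SIGNAL_POINTS.get(signal, 0) for signal in app.signals)


def should_flag(app: Application, threshold: int = 50) -> bool:
    """Flag the application for human review once the score reaches the threshold."""
    return risk_score(app) >= threshold
```

In this sketch no single weak signal is enough to flag an application; it takes several overlapping indicators, which mirrors the idea of associating a new registration with previously recognized suspect behavior. A flagged application would still go to a human reviewer.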

Microsoft is committed to responsible, human-led AI featuring privacy and security, with humans providing oversight, evaluating appeals, and interpreting policies and regulations.

Microsoft detects a tremendous amount of malicious traffic: more than 65 trillion cybersecurity signals per day. AI is boosting our ability to analyze this information and ensure that the most valuable insights are surfaced to help stop threats. We also use this signal intelligence to power generative AI for advanced threat protection, data security, and identity security to help defenders catch what others miss.

Microsoft uses several methods to protect itself and customers from cyberthreats, including AI-enabled threat detection to spot changes in how resources or traffic on the network are used; behavioral analytics to detect risky sign-ins and anomalous behavior; machine learning (ML) models to detect risky sign-ins and malware; Zero Trust models where every access request must be fully authenticated, authorized, and encrypted; and device health verification before a device can connect to a corporate network.
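The Zero Trust and device health checks described above can be sketched as a single gate that every access request must pass. This is a simplified illustration under stated assumptions: the `AccessRequest` fields and the `evaluate` function are hypothetical names, not part of any Microsoft product or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical summary of the checks already performed on one request."""
    authenticated: bool   # identity verified (for example, via MFA)
    authorized: bool      # policy allows this identity to reach the resource
    encrypted: bool       # the connection is encrypted
    device_healthy: bool  # the device passed health verification


def evaluate(request: AccessRequest) -> tuple[bool, list[str]]:
    """Allow the request only if every check passes; report which ones failed."""
    checks = (
        ("authenticated", request.authenticated),
        ("authorized", request.authorized),
        ("encrypted", request.encrypted),
        ("device_healthy", request.device_healthy),
    )
    failures = [name for name, passed in checks if not passed]
    return (not failures, failures)
```

The point of the design is that there is no trusted default: a request that fails any one check is denied, and the list of failed checks gives defenders something to investigate.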

Because threat actors understand that Microsoft uses multifactor authentication (MFA) rigorously to protect itself—all our employees are set up for MFA or passwordless protection—we’ve seen attackers lean into social engineering in an attempt to compromise our employees.

Hot spots for this include areas where things of value are being conveyed, such as free trials or promotional pricing of services or products. In these areas, it isn’t profitable for attackers to steal one subscription at a time, so they attempt to operationalize and scale those attacks without being detected.

Naturally, we build AI models to detect these attacks for Microsoft and our customers. We detect fake student and school accounts, as well as fake companies or organizations that have altered their firmographic data or concealed their true identities to evade sanctions, circumvent controls, or hide past criminal transgressions such as corruption convictions and theft attempts.

The use of GitHub Copilot, Microsoft Copilot for Security, and other copilot chat features integrated into our internal engineering and operations infrastructure helps prevent incidents that could impact operations.

To address email threats, Microsoft is improving capabilities to glean signals beyond an email’s composition to understand if it is malicious. With AI in the hands of threat actors, there has been an influx of perfectly written emails free of the obvious language and grammatical errors that often reveal phishing attempts, making those attempts harder to detect.

Continued employee education and public awareness campaigns are needed to help combat social engineering, which is the one lever that relies 100 percent on human error. History has taught us that effective public awareness campaigns work to change behavior.

Microsoft anticipates that AI will evolve social engineering tactics, creating more sophisticated attacks including deepfakes and voice cloning, particularly if attackers find AI technologies operating without responsible practices and built-in security controls.

Prevention is key to combating all cyberthreats, whether traditional or AI-enabled.

Get more insights around AI from Homa Hayatafar, Principal Data and Applied Science Manager, Detection Analytics Manager.

Traditional tools no longer keep pace with the threats posed by cybercriminals. The increasing speed, scale, and sophistication of recent cyberattacks demand a new approach to security. Additionally, given the cybersecurity workforce shortage, and with cyberthreats increasing in frequency and severity, bridging this skills gap is an urgent need.

AI can tip the scales for defenders. One recent study of Microsoft Copilot for Security (currently in customer preview testing) showed increased security analyst speed and accuracy, regardless of their expertise level, across common tasks like identifying scripts used by attackers, creating incident reports, and identifying appropriate remediation steps.1